In the ever-evolving landscape of artificial intelligence (AI) and machine learning, the art of crafting effective prompts has emerged as a cornerstone for engaging with advanced language models like GPT-3 and GPT-4. Much like finding the right key for a lock, selecting the perfect prompt is a mix of skill and trial-and-error. This challenge led to the birth of ‘Prompt Wizard’ – a tool designed to streamline the process of creating and evaluating prompts.

Understanding Prompt Wizard

The genesis of ‘Prompt Wizard’ lies in the growing need for tools that simplify and automate prompt evaluation. Written in Python, the library builds on the resources available at this GitHub repository. It includes the essential components for prompt generation and evaluation, making it accessible to users of varying technical backgrounds.

Key Features at a Glance

- Custom Prompt Evaluation: ‘Prompt Wizard’ shines in its ability to evaluate and refine custom prompts. Users can either input their own prompts or let the tool generate them, iterating over the best ones for superior outcomes.

- Data-Driven Insights: The results of prompt evaluations are neatly stored in JSON files, ensuring easy access and analysis.

- User-Friendly Interface: Beginners can start by inputting a YAML file defining test cases and evaluation parameters, and the library handles the rest, showcasing its adaptability across different methods and language models.
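To give a feel for why JSON output is convenient to analyze, here is a minimal sketch of writing and reloading an evaluation result with Python's standard library. The field names (`prompt`, `tests`, `passed`, `total`) and the helper functions are our own illustration and are assumptions, not the actual schema or API of Prompt Wizard.

```python
import json
import tempfile
from pathlib import Path


def save_results(results: dict, path: Path) -> None:
    """Write evaluation results to a JSON file (hypothetical helper)."""
    path.write_text(json.dumps(results, indent=2), encoding="utf-8")


def load_results(path: Path) -> dict:
    """Read evaluation results back from a JSON file (hypothetical helper)."""
    return json.loads(path.read_text(encoding="utf-8"))


# Illustrative structure only; the real library may store results differently.
example_results = {
    "prompt": "You write correct, minimal Python functions.",
    "tests": [
        {
            "instruction": "Write a Python function that finds the maximum number in a list.",
            "arguments": [[10, 25, 7, 45, 32]],
            "expected": 45,
            "passed": True,
        }
    ],
    "passed": 1,
    "total": 1,
}

# Round-trip through a temporary file so the example leaves nothing behind.
with tempfile.TemporaryDirectory() as tmp:
    output_file = Path(tmp) / "results.json"
    save_results(example_results, output_file)
    reloaded = load_results(output_file)
```

Because the file is plain JSON, the same results can be inspected in a text editor or loaded into any analysis tool.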

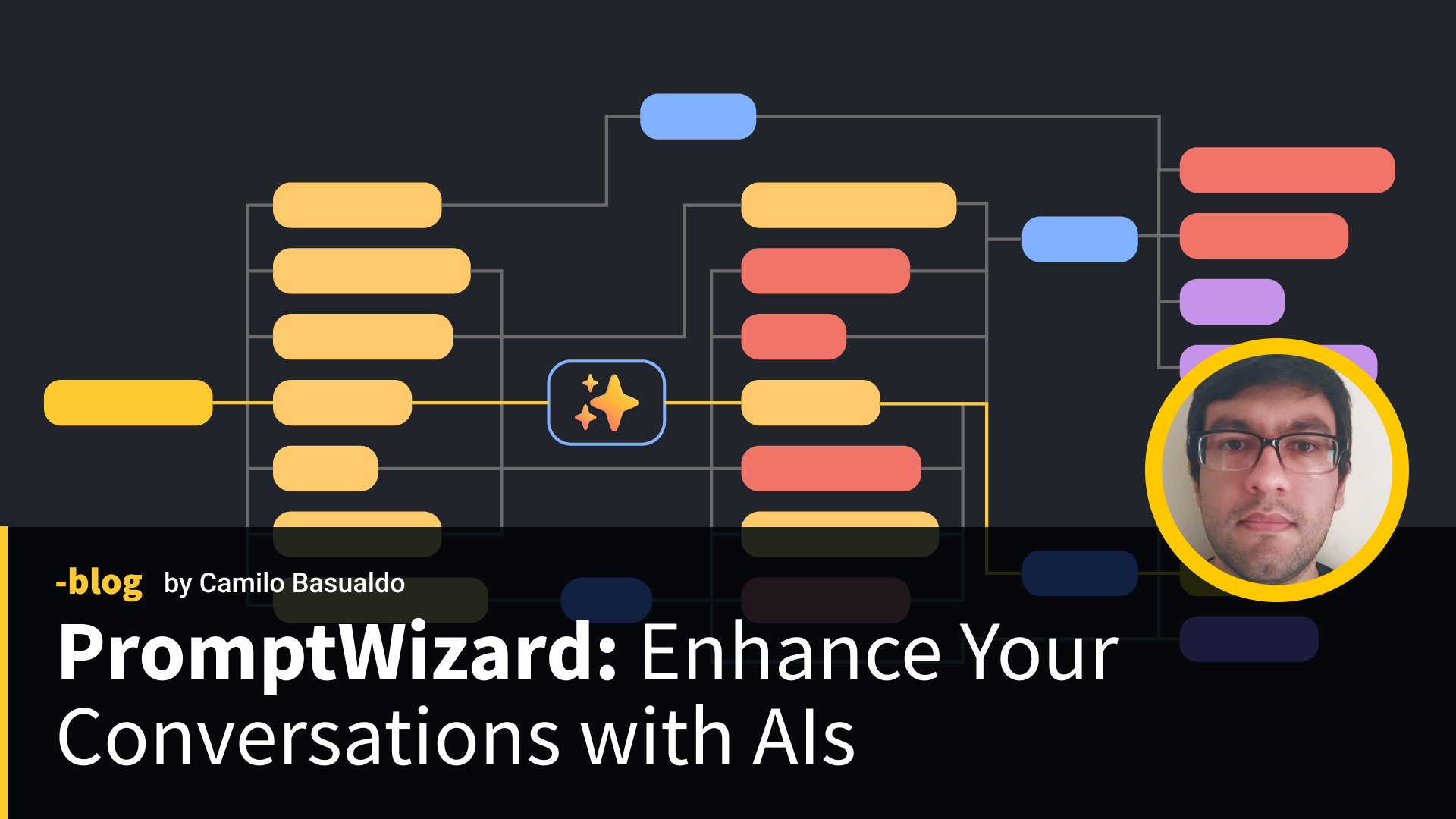

Practical Application Example: Creating Functions in Python or JavaScript

Let’s imagine we have the task of creating functions in Python or JavaScript to solve specific problems. First, we use our PromptWizard tool to generate 10 candidate prompts that will guide the creation of these functions. We then evaluate each of these 10 prompts against a set of 20 test cases to measure its effectiveness.

To evaluate a prompt, we simulate a conversation: the generated prompt serves as the system prompt, and the instruction from the test case serves as the user message. For example, consider the instruction, “Write a Python function that finds the maximum number in a list.” Once we have the generated response, we check whether the answer is a valid Python function. We then execute this function with the arguments defined in the test case, which in this example might be “[10, 25, 7, 45, 32]”, and verify that the result matches the expected outcome, also defined in the test case, here “45”. If the result is correct, the prompt passes that test case.
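The check described above can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions, not the library's implementation: the helper names `extract_function` and `passes_test` are hypothetical, the response is assumed to contain plain Python source (no markdown fences), and the model call is replaced by a hard-coded string.

```python
import ast


def extract_function(response: str):
    """Return the first top-level function defined in `response`, or None."""
    try:
        tree = ast.parse(response)  # rejects anything that is not valid Python
    except SyntaxError:
        return None
    names = [node.name for node in tree.body if isinstance(node, ast.FunctionDef)]
    if not names:
        return None
    namespace = {}
    try:
        # WARNING: exec runs arbitrary code. Real model output should be
        # executed in a sandbox, never directly like this.
        exec(compile(tree, "<response>", "exec"), namespace)
    except Exception:
        return None
    return namespace[names[0]]


def passes_test(response: str, args: list, expected) -> bool:
    """True if the function in `response` returns `expected` for `args`."""
    func = extract_function(response)
    if func is None:
        return False
    try:
        return bool(func(*args) == expected)
    except Exception:
        return False  # a crash counts as a failed test case


# Stand-in for a model response to the "maximum number in a list" instruction.
response = "def find_max(numbers):\n    return max(numbers)\n"
result = passes_test(response, [[10, 25, 7, 45, 32]], 45)
print(result)  # True
```

Counting how many of the 20 test cases return `True` for a given prompt gives that prompt's score.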

Subsequently, we rank the prompts by the number of test cases they passed and identify the top 3 prompts in terms of their ability to generate correct answers.
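As a rough sketch, the ranking step amounts to sorting prompts by their pass counts and keeping the first three. The function name `top_prompts` and the scores below are made up for illustration; they are not taken from the library or from our actual runs.

```python
def top_prompts(scores: dict[str, int], k: int = 3) -> list[tuple[str, int]]:
    """Return the k prompts with the most passed test cases, best first.

    Ties keep their original order because Python's sort is stable.
    """
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:k]


# Hypothetical pass counts out of 20 test cases.
scores = {"prompt A": 5, "prompt B": 20, "prompt C": 12, "prompt D": 18}
best = top_prompts(scores)
print(best)  # [('prompt B', 20), ('prompt D', 18), ('prompt C', 12)]
```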

Iterative Process Explained

After our first iteration

In the first iteration, we take these top 3 prompts and use them as a reference to generate 7 new prompts using the language model. These 7 new prompts are added to the original set. Then, we evaluate these 7 new prompts with the same set of 20 tests. Finally, we combine the results of the 7 new prompts with those of the previous top 3, and re-rank them to identify the new top 3 prompts for the next iteration. Our goal is to enhance the quality of the responses.

After our second iteration

In the second iteration, we continue the process. We take the top 3 prompts from the previous iteration and use them to generate 7 new prompts. Once again, we evaluate these 7 prompts with the same test cases, combine the results with those of the previous top 3, and rank them again to determine the new top 3 prompts. The aim is to refine the quality of the prompts further.
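Put together, the iterations described above form a simple loop: keep the top 3, generate 7 new candidates from them, score only the new ones, and re-rank. The sketch below shows that loop under stated assumptions. `iterate_prompts`, `fake_generate`, and `fake_evaluate` are hypothetical names; the two stubs stand in for the language-model call and for running the 20 test cases, so the scores they produce are meaningless placeholders.

```python
from typing import Callable


def iterate_prompts(
    initial: list[str],
    generate: Callable[[list[str], int], list[str]],
    evaluate: Callable[[str], int],
    iterations: int = 3,
    keep: int = 3,
    new_per_round: int = 7,
) -> list[tuple[str, int]]:
    """Run the keep-top-k / generate / re-rank loop and return the final top k."""
    scores = {prompt: evaluate(prompt) for prompt in initial}
    for _ in range(iterations):
        # Select the current best prompts to use as references.
        top = sorted(scores, key=scores.get, reverse=True)[:keep]
        candidates = generate(top, new_per_round)
        # Carry over the top prompts' existing scores; only new prompts are evaluated.
        scores = {prompt: scores[prompt] for prompt in top}
        for prompt in candidates:
            if prompt not in scores:
                scores[prompt] = evaluate(prompt)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:keep]


def fake_generate(top: list[str], n: int) -> list[str]:
    # Stand-in for a language-model call: derives variants of the best prompt.
    return [f"{top[0]} v{i}" for i in range(n)]


def fake_evaluate(prompt: str) -> int:
    # Stand-in for running 20 test cases: a made-up score capped at 20.
    return min(20, len(prompt))


initial_prompts = [f"prompt {i}" for i in range(10)]
best = iterate_prompts(initial_prompts, fake_generate, fake_evaluate)
for prompt, score in best:
    print(score, prompt)
```

In a real run, `generate` would ask the model for new prompts based on the references and `evaluate` would count passed test cases. A natural extension is to stop early once the best score no longer improves between rounds.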

Results and Conclusion

After about 3 iterations in our example use case, we had identified and refined the top 3 prompts for the task of creating functions in Python or JavaScript. These prompts became significantly better at generating accurate and effective responses for the specific test cases used in the evaluation. Beyond that point, further iterations yielded only insignificant improvement, so we decided not to continue.

Prompt iteration is an effective strategy for improving the quality of responses that language models generate for specific tasks, because it lets us refine and optimize the prompts themselves. That’s why we created PromptWizard: to provide an easy way to tackle this challenge. If you want to achieve optimal results in tasks that involve interacting with language models, do not underestimate the power of prompt iterations. Keep in mind that the number of iterations required may vary depending on the task and the quality of the initial prompts.

Final Thoughts

The realm of AI conversations is intricate, and ‘Prompt Wizard’ is our attempt to simplify this complexity. By harnessing the power of prompt iterations, you can significantly enhance the effectiveness of your AI interactions. We invite you to explore this tool and see the improvements in your projects over time. You can install Prompt Wizard from PyPI or clone our GitHub repository. We also invite you to try other evaluation methods.