After exploring what RAG brought to the table for our custom chatbots, I decided to take a detour into the world of fine-tuning and the Mixture of Experts approach. Why? Because simplicity is key. Let’s dive into how we can train our custom “budgeting expert” and what we can get from this approach.

Link to RAG model - AI Advisor integrated with custom data.

Index

1. Project definition
   - 1.1 Purpose and Scope
   - 1.2 Background and Objectives
   - 1.3 Product Description
     - 1.3.2 Key Features
2. Solution
   - 2.1 Scope phasing
   - 2.2 Procedure
3. Results
4. Conclusions
5. Recommendation

APPENDIX 1: Comparative Analysis Table RAG vs. Fine-Tuned Model

1. Project definition

1.1 Purpose and Scope

The primary purpose of this project is to enhance the quality of information provided by our chatbot, focusing on creating a less complex yet effective solution. Initially, a Retrieval Augmented Generation (RAG) model was developed, but its escalating complexity led us to explore the Mixture of Experts (MoE) approach. The scope encompasses the development and fine-tuning of a specialized Large Language Model (LLM) within the MoE structure, such as a “Budgeting Expert.” This single expert model will serve as a test case to evaluate the MoE approach’s effectiveness in delivering high-quality, accurate responses compared to the previous RAG model and the generalist base model. The project aims to balance system simplicity and information quality, enhancing the chatbot’s performance with a more manageable and efficient model architecture.

Hypothesis: the creation of topic-specific experts within a MoE will enhance data quality in chatbot interactions. This approach is expected to provide accurate responses tailored to user requirements while maintaining a stable level of system complexity, even as more experts are added for diverse topics.

1.2 Background and Objectives

- Problem Statement: The primary objective is to elevate the quality of client interactions with our chatbot by ensuring the provision of accurate, reliable, and data-driven responses. At present, a major impediment to achieving this goal is the AI’s propensity for ‘hallucinating’, that is, generating incorrect information. In our initial approach, we developed a RAG model to mitigate this issue. Nevertheless, as the project scaled up to accommodate a wide range of data types, encompassing both structured and unstructured forms across various topics, the RAG model’s complexity escalated significantly. This complexity is further amplified because the RAG model must handle user queries differently depending on the type of document retrieved. For instance, a query might be addressed one way when pulling information from structured data such as CSV, and another way when referring to unstructured text. This adds layers of complexity to the RAG model, as it needs to process and respond to varied data types in contextually relevant ways. In light of these challenges, we propose a different strategy: fine-tuning an LLM across specific knowledge domains. Additionally, we intend to implement an intelligent question-routing algorithm, called the orchestrator, which will discern the subject matter of each query and steer it towards the most suitable, specialized LLM. This method, which we refer to as the MoE approach, is designed to enhance the precision and reliability of the chatbot’s responses, ultimately fostering greater client trust and satisfaction.

Note: Additionally, we can maintain the utilization of the RAG model by integrating it as one of the experts within the MoE.

- Objectives:

- To enhance the quality of the chatbot’s responses by fine-tuning a single expert model within the MoE.

- To conduct a comparative analysis of the fine-tuned expert model, the original base model, and the RAG model, in order to validate the effectiveness of the MoE approach.

1.3 Product Description

Our product features a cutting-edge chatbot system, utilizing a MoE. This innovative approach marks a significant departure from the RAG method. The MoE excels in delivering customized and adaptable solutions for chatbot knowledge requirements. It leverages specialized LLMs across diverse knowledge domains, ensuring precise and relevant responses to user inquiries.

1.3.2 Key Features

- Specialized Expert Modules: The system comprises fine-tuned LLMs, each an expert in a specific domain like personal finance, budgeting or student loans.

- Scalability and Flexibility: Designed to be scalable, the system can easily accommodate additional expert modules as new needs emerge.
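The routing idea behind these expert modules can be sketched in a few lines. This is only an illustration under stated assumptions: the orchestrator is not implemented in this project, keyword matching stands in for whatever classification it would really use, and the `Expert` class, the keyword lists, and the student-loans entry are hypothetical. The budgeting model ID is the fine-tuned model reported in the Procedure section.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Expert:
    name: str
    model_id: str              # model the question would be sent to
    keywords: Tuple[str, ...]  # hypothetical routing hints


# Only the budgeting expert exists in this project; the second entry and
# all keyword lists are placeholders for illustration.
EXPERTS = (
    Expert("budgeting", "ft:gpt-3.5-turbo-1106:personal::8hpLGpEs",
           ("budget", "expense", "spending", "saving")),
    Expert("student_loans", "hypothetical-student-loans-expert",
           ("student loan", "tuition", "repayment")),
)
FALLBACK_MODEL = "gpt-4-1106-preview"  # generalist base model


def route(question: str,
          experts: Sequence[Expert] = EXPERTS,
          fallback: str = FALLBACK_MODEL) -> str:
    """Return the model id of the expert with the most keyword hits.

    Falls back to the generalist model when no expert matches.
    """
    text = question.lower()
    best_model, best_hits = fallback, 0
    for expert in experts:
        hits = sum(1 for kw in expert.keywords if kw in text)
        if hits > best_hits:
            best_model, best_hits = expert.model_id, hits
    return best_model
```

Adding a new expert then means appending one entry to `EXPERTS`; the routing function itself does not change, which is the scalability property described above.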

2. Solution

Our strategy involves refining a specialized LLM to function as an expert model, thereby enhancing the response quality of our chatbot. We plan to undertake a thorough comparative analysis, measuring the performance of this fine-tuned expert model against both the current base model and the RAG. This targeted method is designed to directly boost both the accuracy and reliability of the chatbot’s responses. In this section, we will detail our phased approach and the specific procedures for implementing our proposed solution.

2.1 Scope phasing

This project encompasses a series of systematic steps aimed at enhancing the chatbot’s performance through a specialized expert model within the MoE. These steps are designed to ensure the development, integration, and evaluation of the model are executed with precision and effectiveness. The process includes:

- Dataset Creation

- Dataset Preparation

- Model Fine-Tuning

- Model Integration

- Comparison of Models

2.2 Procedure

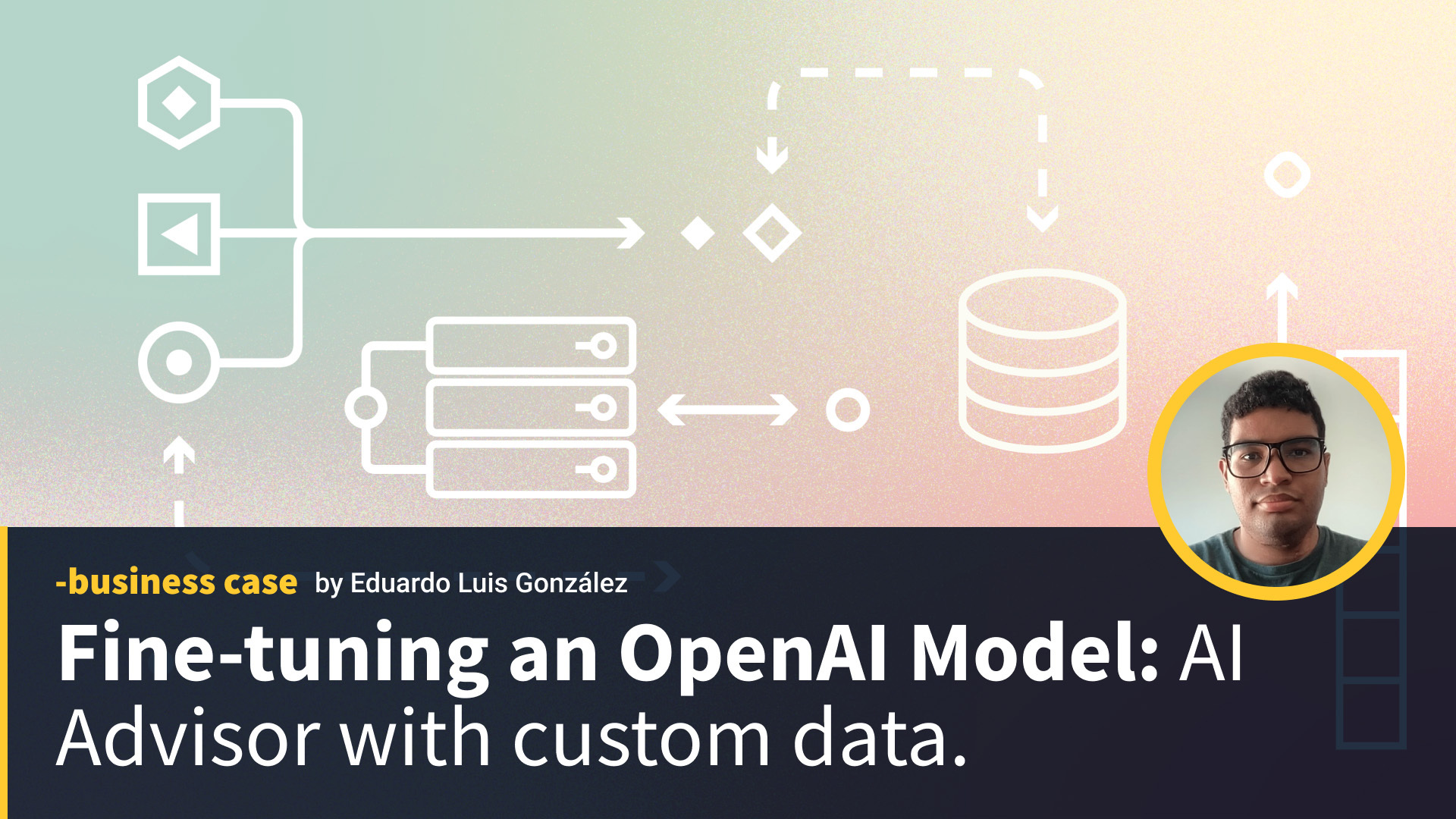

To validate our hypothesis, we plan to fine-tune an LLM on a specific topic, creating an expert model, and then compare its responses with those of the base model. Specifically, we intend to specialize the LLM in the area of budgeting, thus developing what we call the “Budgeting Expert”. This approach is illustrated in the diagram above, which details the structure of the MoE. This structure underpins the customized architecture of our model, showing how the different modules are optimized to handle different aspects, from financial analysis to more complex analyses.

The procedure involves the following steps:

- Dataset Creation: We select a specific webpage for scraping to generate a dataset consisting of question-and-answer pairs. Leveraging the scraper developed for the RAG model, we construct a dataset in CSV format containing 1,000 such pairs. To create the questions and answers for this dataset, we implement a logic that utilizes OpenAI. Firstly, OpenAI is employed to generate questions about the content obtained from the scraping process. Subsequently, these questions are fed back into OpenAI, which then generates the corresponding answers. This approach ensures a dynamic and relevant set of question-and-answer pairs based on the scraped content.

- Dataset Preparation: OpenAI requires a specific format for training data, namely a JSONL file (one JSON object per line) where each entry comprises a system prompt, the user question, and the assistant’s response.

- Model Fine-Tuning: We fine-tune an OpenAI model, specifically the gpt-3.5-turbo-1106, utilizing OpenAI’s APIs. The fine-tuning process for 1,000 data points takes approximately two hours, resulting in the fine-tuned model identified as ‘ft:gpt-3.5-turbo-1106:personal::8hpLGpEs’.

- Model Integration: To deploy the new model, we replace the reference in our system. Instead of using ‘gpt-4-1106-preview’, we now integrate ‘ft:gpt-3.5-turbo-1106:personal::8hpLGpEs’.
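The dataset preparation and fine-tuning steps above can be sketched as follows. This is a minimal sketch, not the project's actual code: the CSV column names `question` and `answer`, the system prompt wording, and the helper names are assumptions. The `start_fine_tune` helper assumes the `openai` Python package (v1 or later) and an `OPENAI_API_KEY` environment variable; it is imported lazily so the conversion helpers run without it.

```python
import csv
import json

# Assumed wording; the project's actual system prompt is not given.
SYSTEM_PROMPT = "You are a budgeting expert."


def to_chat_example(question: str, answer: str,
                    system_prompt: str = SYSTEM_PROMPT) -> dict:
    """Build one training example in the chat fine-tuning format."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def csv_to_jsonl(csv_path: str, jsonl_path: str) -> int:
    """Convert a CSV with 'question' and 'answer' columns (assumed names)
    into a JSONL file, one example per line. Returns the number written."""
    count = 0
    with open(csv_path, newline="", encoding="utf-8") as src, \
            open(jsonl_path, "w", encoding="utf-8") as dst:
        for row in csv.DictReader(src):
            example = to_chat_example(row["question"].strip(),
                                      row["answer"].strip())
            dst.write(json.dumps(example, ensure_ascii=False) + "\n")
            count += 1
    return count


def start_fine_tune(jsonl_path: str,
                    base_model: str = "gpt-3.5-turbo-1106") -> str:
    """Upload the training file and start a fine-tuning job.

    Returns the job id. Needs network access and an API key.
    """
    from openai import OpenAI  # lazy import: only needed for this step

    client = OpenAI()
    with open(jsonl_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=uploaded.id,
                                         model=base_model)
    return job.id
```

Once the job finishes, integration amounts to passing the resulting fine-tuned model ID as the model name wherever the system previously referenced ‘gpt-4-1106-preview’.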

3. Results

To obtain the results, we prepared the RAG model by uploading the content scraped from a webpage. For the RAG and the base model, we used the “gpt-4-1106-preview” version of OpenAI’s model. We restricted the test questions to the content of that webpage, specifically budgeting. For the fine-tuned model, we used only the content from the same webpage that was used for the RAG model. This fine-tuned model was based on the “gpt-3.5-turbo-1106” version of OpenAI’s model.

Our comparative analysis therefore included three variants: the base model, the RAG model, and the fine-tuned model. The table below compares the answers from the three variants and documents the time each took to generate each answer. Note that the response time is only indicative, as it depends on factors like internet speed, the state of OpenAI’s servers, and the length of the generated answer. The point of including it is to observe each model’s tendency in terms of response time, which gives further insight into operational efficiency.

Note: In this analysis, we only fine-tuned one model of the MoE. Therefore, the documented time reflects only the time taken for the expert model to generate an answer. In future studies, with the implementation of the orchestrator, it will be necessary to include the time taken by the orchestrator to select the appropriate expert model to respond to a user’s question. This will add another layer to our understanding of the operational efficiency of these models, particularly in a multi-expert system.

| Base model | RAG | Fine-tuned |
|---|---|---|
| give me the 5 Budgeting Misconceptions You Should Stop Believing | | |
| It seems you're looking for advice on budgeting misconceptions. Here are five common misconceptions you should be aware of: | The 5 Budgeting Misconceptions You Should Stop Believing are: | |
| Time 13s | Time 39s | Time 7s |
| some misconceptions about budgeting | | |
| Here are some common misconceptions about budgeting that may hinder financial progress: | One of the misconceptions about budgeting is that it's difficult to predict unexpected expenses, however, a portion of a budget can be set aside for such occasions. Another misconception is that creating a budget is time-consuming, but it can be done quickly after initially organizing finances. Lastly, people may believe that the process of budgeting is complicated, but simple applications are available to assist in creating and following a budget. | |
| Time 27s | Time 28s | Time 5s |
| What is budgeting? | | |
| Budgeting refers to: | Budgeting is the process of creating a plan to manage your money, which allows you to: | Budgeting is the process of creating a plan for your income and expenses, helping you know how much money is coming in and going out of your accounts each month. It involves itemizing your expected expenses and comparing this against your actual income to determine the best ways to save and to adjust your spending. |
| Time 9s | Time 32s | Time 5s |
| good reasons to implement a budget | | |
| Good reasons to implement a budget include: | Implementing a budget is important for many reasons, including ensuring that you spend less than you earn, allowing you to create an emergency savings fund, making large purchases such as a home or a car, and focusing on your financial goals to avoid additional debt. Budgeting can also help you better understand and manage your spending patterns and save money for future security or retirement. | |
| Time 17s | Time 43s | Time 5s |
| How do I budget? | | |
| To start budgeting effectively: | To create a budget, follow these steps: | To create a successful budget, you need to outline your financial goals, set a timeline for them, and define which expenses are essential and which are not. This involves a basic, intermediate, or advanced method, using a 50/30/20 rule, zero-based budgeting, a pay-yourself-first strategy, or envelope budgeting, depending on which suits your financial situation and preferences. Do you have any expenses that aren't covering your costs? |
| Time 13s | Time 31s | Time 4s |
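Because response times depend on network and server conditions, one way to make such comparisons a little more robust is to repeat each request and report the median rather than a single reading. The helper below is a minimal sketch of that idea, not the method used for the table; `time_call` and its `repeats` parameter are hypothetical names, and the callable passed in would wrap whatever request is being measured.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_call(fn: Callable[[], object], repeats: int = 5) -> Dict[str, float]:
    """Run fn several times and summarize the wall-clock durations in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    durations: List[float] = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()  # e.g. a function that sends one question to one model
        durations.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(durations),
        "min_s": min(durations),
        "max_s": max(durations),
    }
```

For a multi-expert setup, the same helper could wrap the orchestrator call and the expert call separately, so that routing time and generation time are reported side by side.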

4. Conclusions

- Enhancement of Chatbot Response Quality: The fine-tuning of a single expert model enhanced the quality of the chatbot’s responses, particularly in the domain of budgeting, aligning with the first objective.

- Comparative Analysis: The comparative analysis of the fine-tuned expert model against the original base model and the RAG model demonstrated the MoE approach’s effectiveness. This aligns with the second objective of validating the MoE approach through comparative analysis.

- Domain-Specific Knowledge Integration: The fine-tuned expert model displayed a notable alignment with the budgeting content from the selected website, indicating successful integration of domain-specific knowledge.

- Time Efficiency in Response Generation: The base model outpaces the RAG model in response time, because the latter first has to retrieve information from the vector database. The fine-tuned model, built on gpt-3.5-turbo-1106, is faster still than the base model, which uses gpt-4-1106-preview. Since the two rely on different underlying models, part of this gap may come from the choice of underlying model rather than from fine-tuning alone.

5. Recommendation

Based on the conclusions, the following recommendations are proposed:

- Dataset Quality Improvement: Refine the dataset used for fine-tuning by removing unnecessary textual elements like “according to the content provided” to streamline and enhance the quality of the responses.

- User Engagement Enhancement: Incorporate calls to action in the chatbot’s dataset, such as encouraging users to consult EarnUp services. This addition will enable the chatbot to include these prompts in its responses, potentially increasing user engagement and providing more directed assistance.
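The dataset clean-up suggested in the first recommendation could look roughly like this. It is a sketch under assumptions: only the phrase “according to the content provided” comes from the recommendation, the other two patterns are hypothetical variants, and `clean_answer` is an illustrative helper name.

```python
import re

# The first phrase comes from the recommendation above; the other two are
# hypothetical variants added for illustration.
BOILERPLATE_PHRASES = [
    r"according to the content provided",
    r"based on the content provided",
    r"as mentioned in the provided content",
]

# Match the phrase together with any commas and spaces wrapped around it.
_PHRASE_RE = re.compile(
    r"\s*,?\s*(?:" + "|".join(BOILERPLATE_PHRASES) + r")\s*,?\s*",
    re.IGNORECASE,
)


def clean_answer(answer: str) -> str:
    """Remove source-referencing filler from a generated answer."""
    text = _PHRASE_RE.sub(" ", answer).strip()
    text = re.sub(r"\s{2,}", " ", text)           # collapse repeated spaces
    text = re.sub(r"\s+([.,;:!?])", r"\1", text)  # no space before punctuation
    if text:
        text = text[0].upper() + text[1:]         # restore sentence case
    return text
```

Running this over the answer column before converting the dataset to the training format would keep such filler out of the fine-tuned model's responses.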

APPENDIX 1: Comparative Analysis Table RAG vs. Fine-Tuned Model

| Aspect | RAG | Fine-tuning Model |
|---|---|---|
| Complexity | Moderate (Requires coding and architectural skills) | High (Demands deep understanding of deep learning, NLP, expertise in data preprocessing, model configuration, and evaluation) |
| Accuracy | Variable (Depends on domain and task) | High (Enhances domain-specific understanding and predictions) |
| Domain Specificity | Moderate (May not capture domain-specific patterns as effectively) | High (Can impart domain-specific terminology and nuances) |
| Up-to-date Responses | High (Ensures updated responses via external documents) | Low (Becomes a fixed snapshot of its training dataset, requires regular retraining for evolving data) |
| Avoidance of Hallucinations | High (Reduces hallucinations by anchoring in retrieved documents) | Moderate (Reduces hallucinations in domain-specific data but unfamiliar queries may still cause errors) |
| Cost Considerations | Requires compute power for embedding models and vector databases | Involves significant compute power and costs for training, data acquisition, and maintenance |
| Dataset Importance | Not specified in the sources | Critical, as the quality and relevance of the dataset significantly impact the effectiveness of fine-tuning |
| Data Dynamics | Excels in dynamic data environments by continuously querying external sources for up-to-date information. | Models become static snapshots of data and may become outdated in dynamic scenarios. |
| Model Customization | Focuses on information retrieval but may not adapt linguistic style or domain-specificity based on retrieved info. | Allows adaptation of LLM’s behavior, writing style, and domain knowledge to specific nuances and terminologies. |
| Suitability for Multiple Tasks | Not specifically designed for task switching. | Efficiency in task switching is untested for LoRA in situations requiring fine-tuning on multiple tasks sequentially. |